Using NVIDIA GPU Resources on Kubernetes

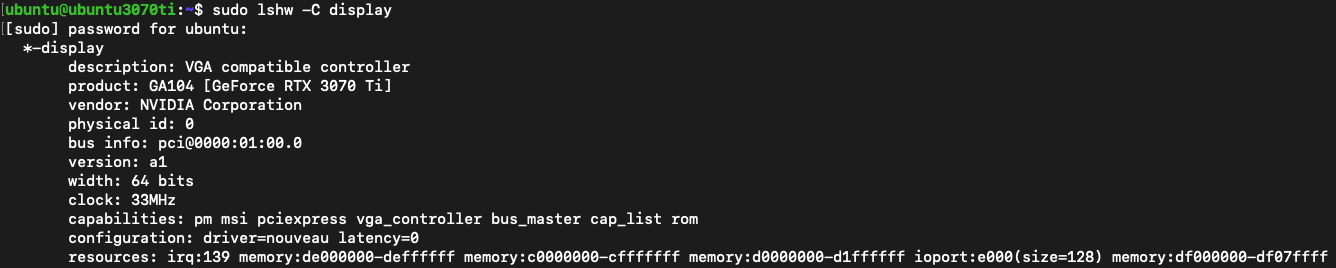

Remove default driver

Check whether the default driver is present

sudo lshw -C display

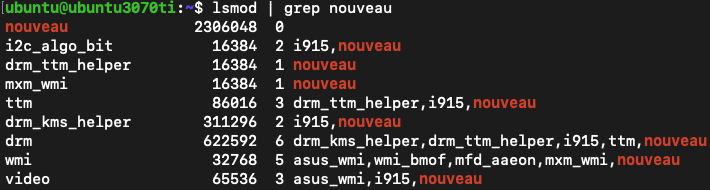

List the default driver

lsmod | grep nouveau

If "nouveau" appears in the output, the default open-source driver is loaded.

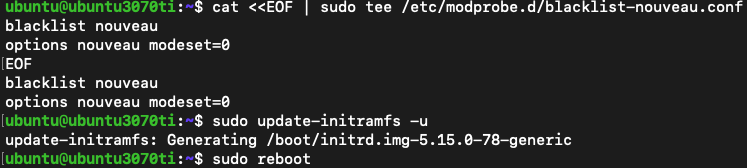
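
The check above can also be scripted. The following is a minimal sketch, assuming the usual lsmod layout with the module name in the first column; nouveau_loaded is a hypothetical helper name, and it reads lsmod output on standard input so it can be tried on any machine.

```shell
# Sketch: succeed (exit 0) if lsmod-style input lists the nouveau module.
# nouveau_loaded is a hypothetical helper, not part of any NVIDIA tooling.
nouveau_loaded() {
  # lsmod prints the module name in the first column, so match it exactly
  # rather than grepping the whole line (other modules may list nouveau
  # in their "Used by" column).
  awk '$1 == "nouveau" { found = 1 } END { exit found ? 0 : 1 }'
}

# Usage on a real host:
#   if lsmod | nouveau_loaded; then echo "nouveau is loaded"; fi
```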

Disable (blacklist) the default driver and reboot

cat <<EOF | sudo tee /etc/modprobe.d/blacklist-nouveau.conf
blacklist nouveau
options nouveau modeset=0
EOF

sudo update-initramfs -u

sudo reboot
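
After the reboot it may be worth confirming that the blacklist file is in place before installing the NVIDIA driver. A small sketch, assuming the file path used above; blacklist_in_place is a hypothetical helper name.

```shell
# Sketch: succeed only if both directives are present as whole lines.
# blacklist_in_place is a hypothetical helper; the default path is the
# one written by the tee command above.
blacklist_in_place() {
  conf="${1:-/etc/modprobe.d/blacklist-nouveau.conf}"
  grep -qx 'blacklist nouveau' "$conf" &&
    grep -qx 'options nouveau modeset=0' "$conf"
}

# Usage after reboot:
#   blacklist_in_place && ! lsmod | grep -q '^nouveau' && echo "nouveau disabled"
```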

Install NVIDIA CUDA

sudo apt-get update -y

sudo apt install -y build-essential linux-headers-$(uname -r) wget

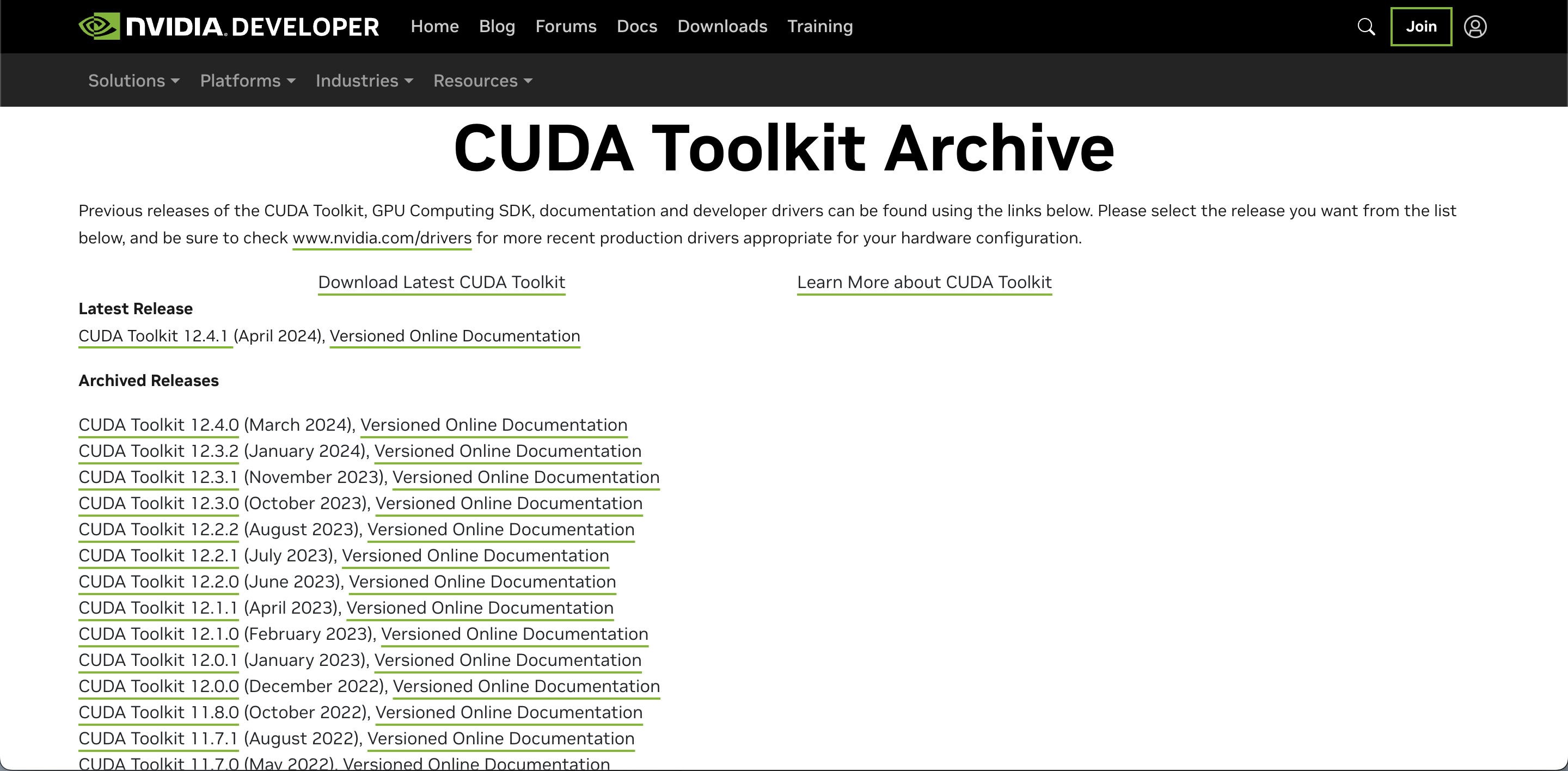

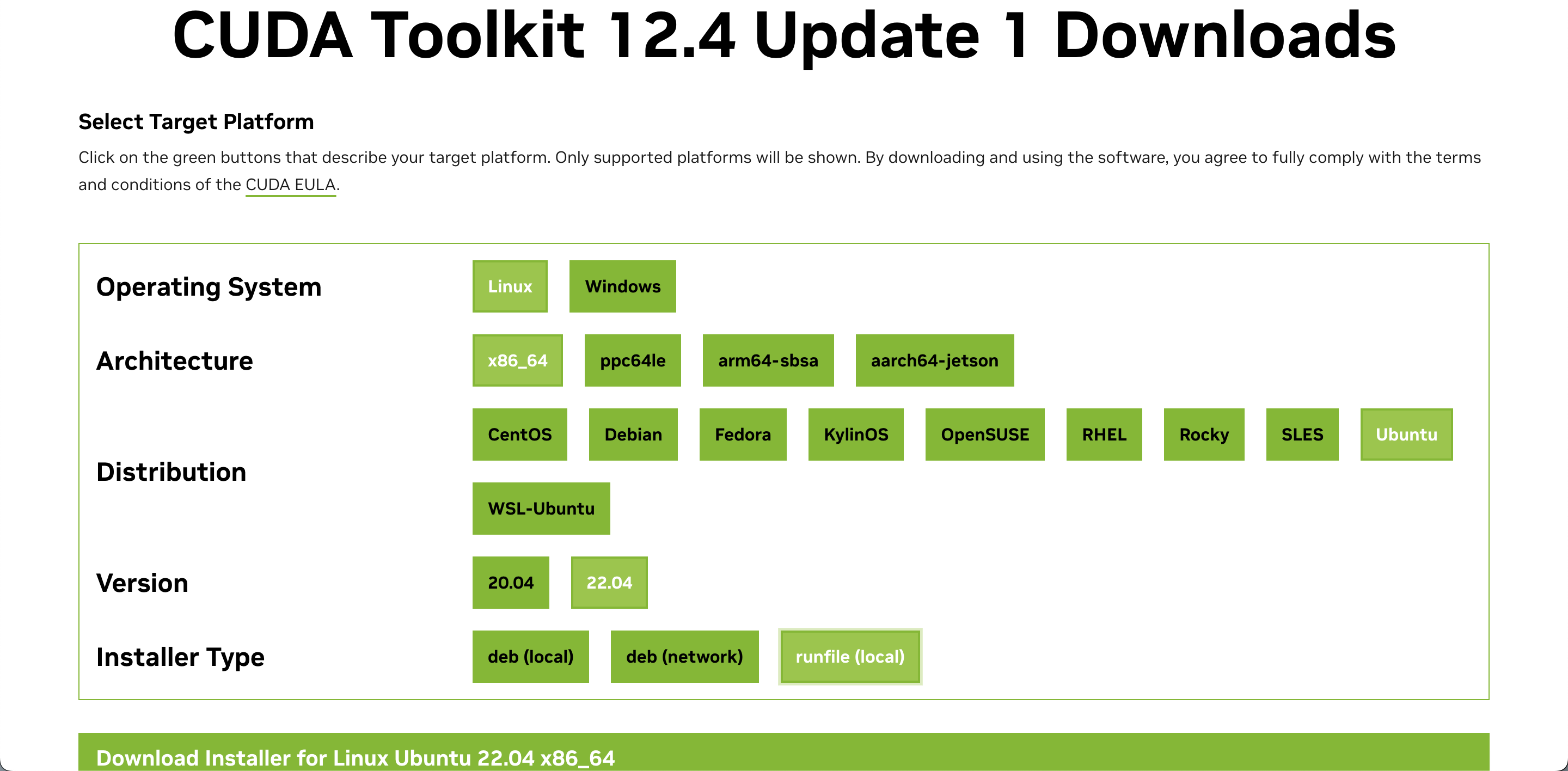

Download the required CUDA Toolkit version from the NVIDIA official website

NVIDIA CUDA Toolkit Official Download Website

The environment demonstrated here uses the CUDA 12.4.1 runfile installer, which bundles driver 550.54.15

wget https://developer.download.nvidia.com/compute/cuda/12.4.1/local_installers/cuda_12.4.1_550.54.15_linux.run

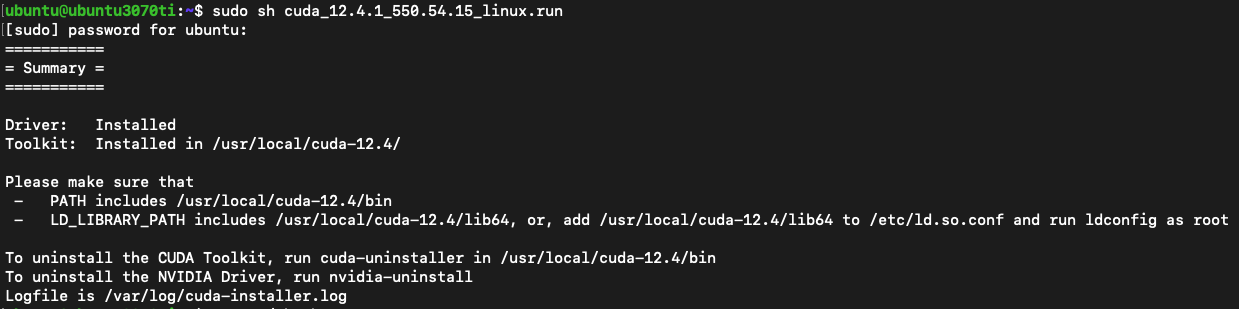

sudo sh cuda_12.4.1_550.54.15_linux.run

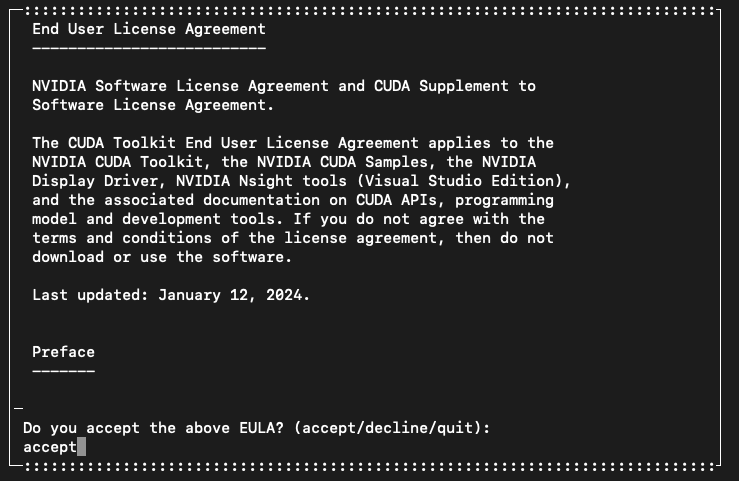

The installer opens a text-based menu. Type accept to accept the license terms

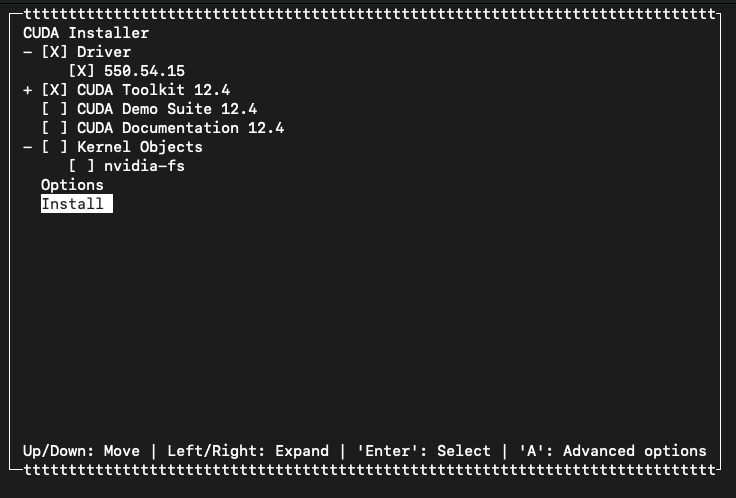

Use the space bar to select "Driver" and "CUDA Toolkit"

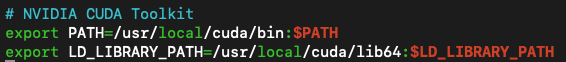
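
For unattended hosts, the same selection can be made without the menu. This is a sketch that relies on the runfile's --silent, --driver and --toolkit options; install_cuda_silent is a hypothetical wrapper name, and --silent also accepts the license terms on your behalf, so read them first.

```shell
# Sketch: non-interactive equivalent of choosing "Driver" and
# "CUDA Toolkit" in the installer menu.
# install_cuda_silent is a hypothetical wrapper, not an NVIDIA command.
install_cuda_silent() {
  runfile="$1"
  # --silent skips the menu (and implies acceptance of the license terms);
  # --driver and --toolkit pick the two components used in this guide.
  sudo sh "$runfile" --silent --driver --toolkit
}

# Usage:
#   install_cuda_silent cuda_12.4.1_550.54.15_linux.run
```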

After the installation is complete, add the following two lines to the end of ~/.bashrc

nano ~/.bashrc

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

source ~/.bashrc

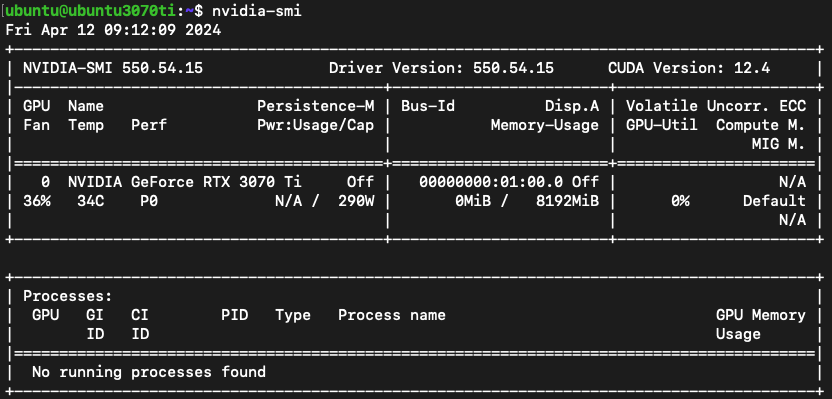

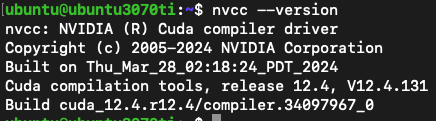
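
Editing ~/.bashrc by hand works, but re-running a setup script should not add the same lines twice. A minimal sketch of an idempotent append; append_once is a hypothetical helper name.

```shell
# Sketch: append a line to a file only if that exact line is not
# already there. append_once is a hypothetical helper.
append_once() {
  line="$1"
  file="$2"
  touch "$file"
  # -x matches whole lines, -F treats the pattern as a fixed string
  # so "$" and "/" in the export line are not interpreted.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (single quotes keep $PATH unexpanded until ~/.bashrc is sourced):
#   append_once 'export PATH=/usr/local/cuda/bin:$PATH' ~/.bashrc
#   append_once 'export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH' ~/.bashrc
```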

Check NVIDIA CUDA

nvidia-smi

nvcc --version

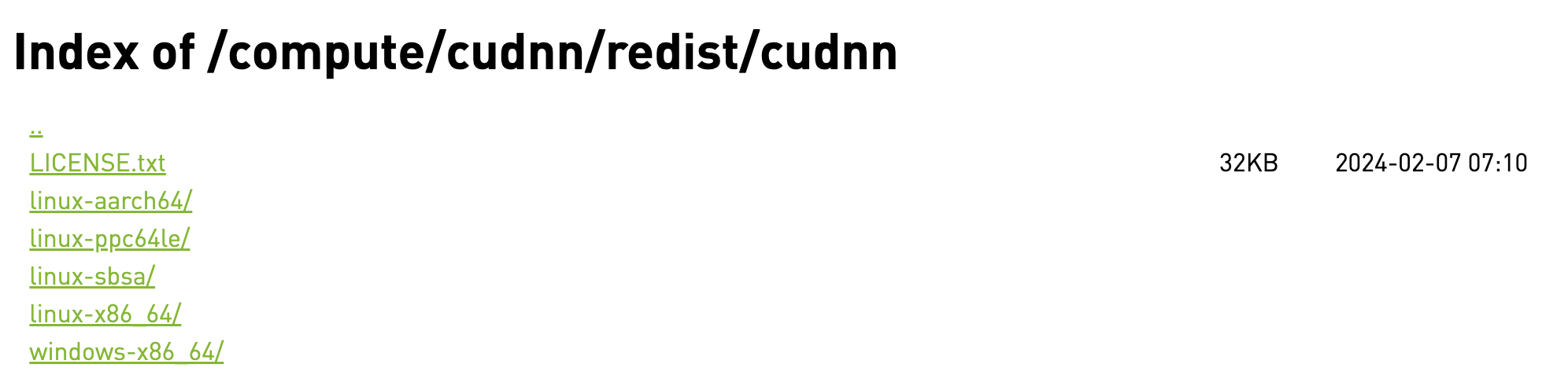

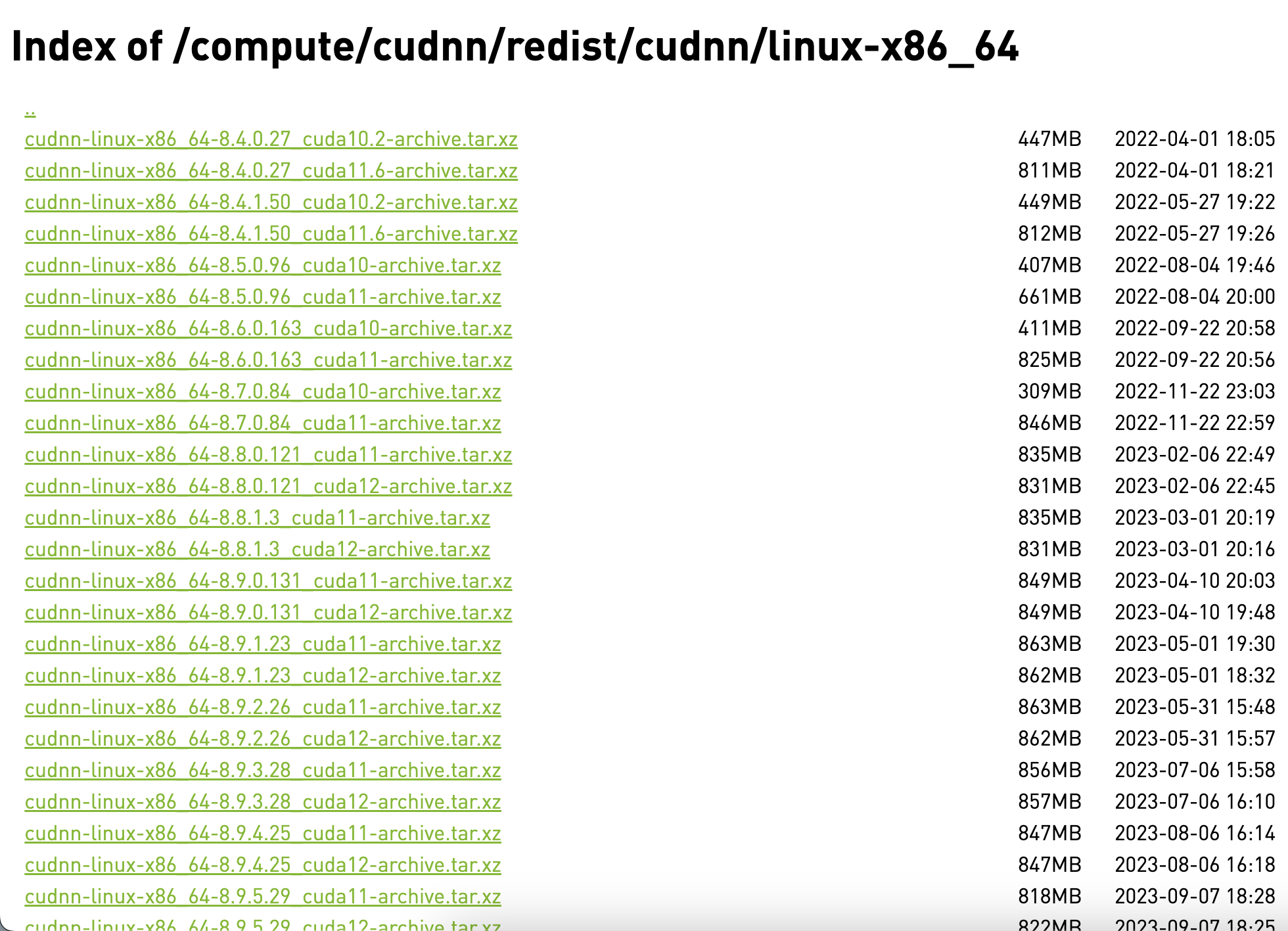
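
When a later step needs the toolkit release as a value (for example to pick a matching cuDNN archive), it can be extracted from the nvcc output. A sketch, assuming the usual "release X.Y" wording in the nvcc banner; nvcc_release is a hypothetical helper name.

```shell
# Sketch: print the CUDA release (major.minor) found in
# `nvcc --version` output supplied on stdin.
# nvcc_release is a hypothetical helper.
nvcc_release() {
  sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p' | head -n 1
}

# Usage:
#   nvcc --version | nvcc_release
```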

Install NVIDIA cuDNN

Download the required cuDNN version from the NVIDIA official website

NVIDIA cuDNN Official Download Website

The system environment here is Ubuntu 22.04 x86_64, so choose linux-x86_64/

Here we take cudnn-linux-x86_64-8.9.7.29_cuda12-archive.tar.xz as an example

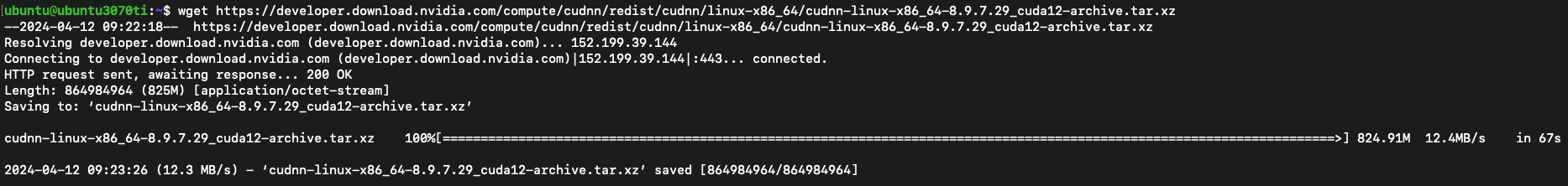

wget https://developer.download.nvidia.com/compute/cudnn/redist/cudnn/linux-x86_64/cudnn-linux-x86_64-8.9.7.29_cuda12-archive.tar.xz

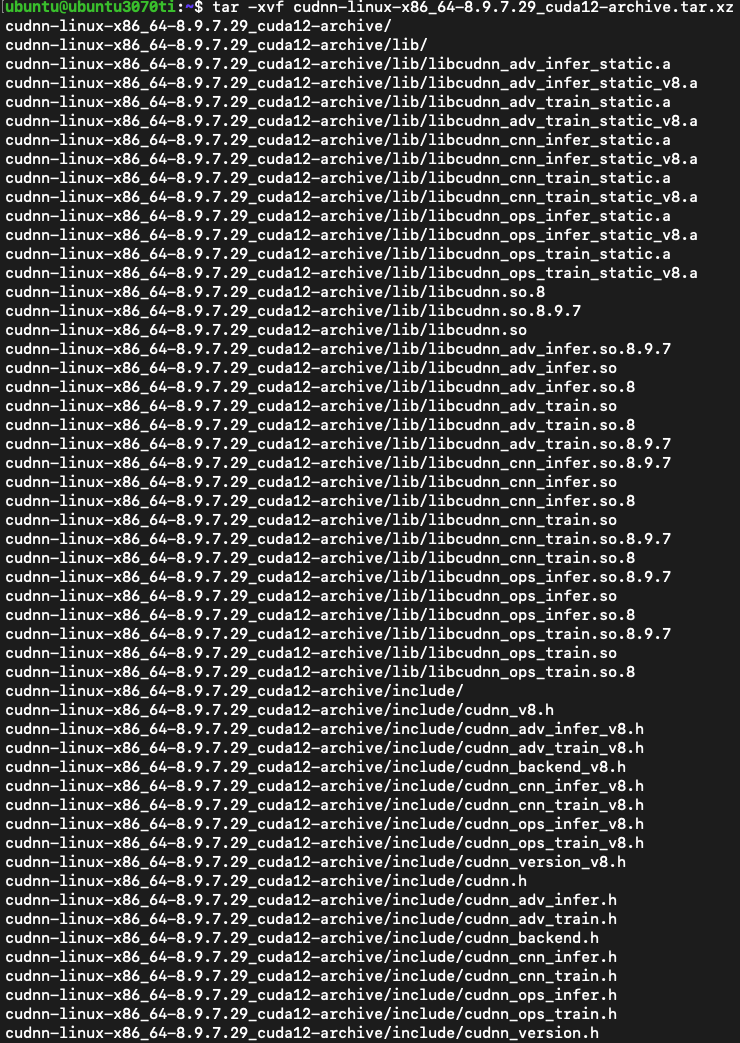

tar -xvf cudnn-linux-x86_64-8.9.7.29_cuda12-archive.tar.xz

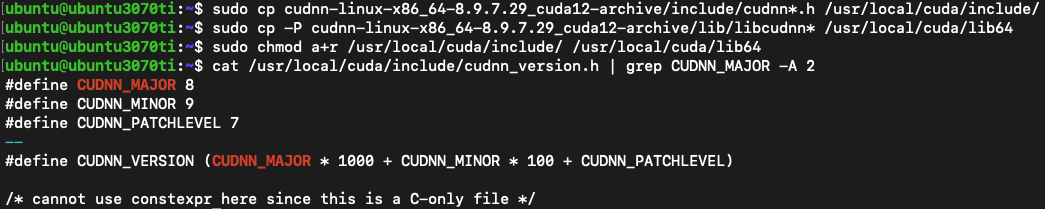

sudo cp cudnn-linux-x86_64-8.9.7.29_cuda12-archive/include/cudnn*.h /usr/local/cuda/include/

sudo cp -P cudnn-linux-x86_64-8.9.7.29_cuda12-archive/lib/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*

cat /usr/local/cuda/include/cudnn_version.h | grep CUDNN_MAJOR -A 2
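
The grep above prints the three raw #define lines. To get a single version string instead, something like the following sketch could be used; it assumes the header defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL as plain integers, and cudnn_version is a hypothetical helper name.

```shell
# Sketch: print the cuDNN version as major.minor.patch by reading the
# #define lines in cudnn_version.h. cudnn_version is a hypothetical helper.
cudnn_version() {
  header="${1:-/usr/local/cuda/include/cudnn_version.h}"
  awk '
    $1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
    $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
    $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
    END { print major "." minor "." patch }
  ' "$header"
}

# Usage:
#   cudnn_version
```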

Install DKMS

sudo apt-get update -y

sudo apt install -y dkms

# The NVIDIA driver version can be obtained from nvidia-smi, for example: 550.54.15

sudo dkms install -m nvidia -v <NVIDIA Driver Version>
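
Rather than copying the version by hand, it can be read from nvidia-smi's query mode. A sketch, assuming the driver is already loaded so that nvidia-smi responds; first_driver_version is a hypothetical helper that keeps only the first GPU's value on multi-GPU hosts.

```shell
# Sketch: print the first non-empty line of the input, trimmed.
# Intended for `nvidia-smi --query-gpu=driver_version` output, which
# repeats the same version once per GPU.
# first_driver_version is a hypothetical helper.
first_driver_version() {
  awk 'NF { print $1; exit }'
}

# Usage on a host with the driver loaded:
#   ver="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | first_driver_version)"
#   sudo dkms install -m nvidia -v "$ver"
```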

NVIDIA Container Toolkit Official Installation Guide

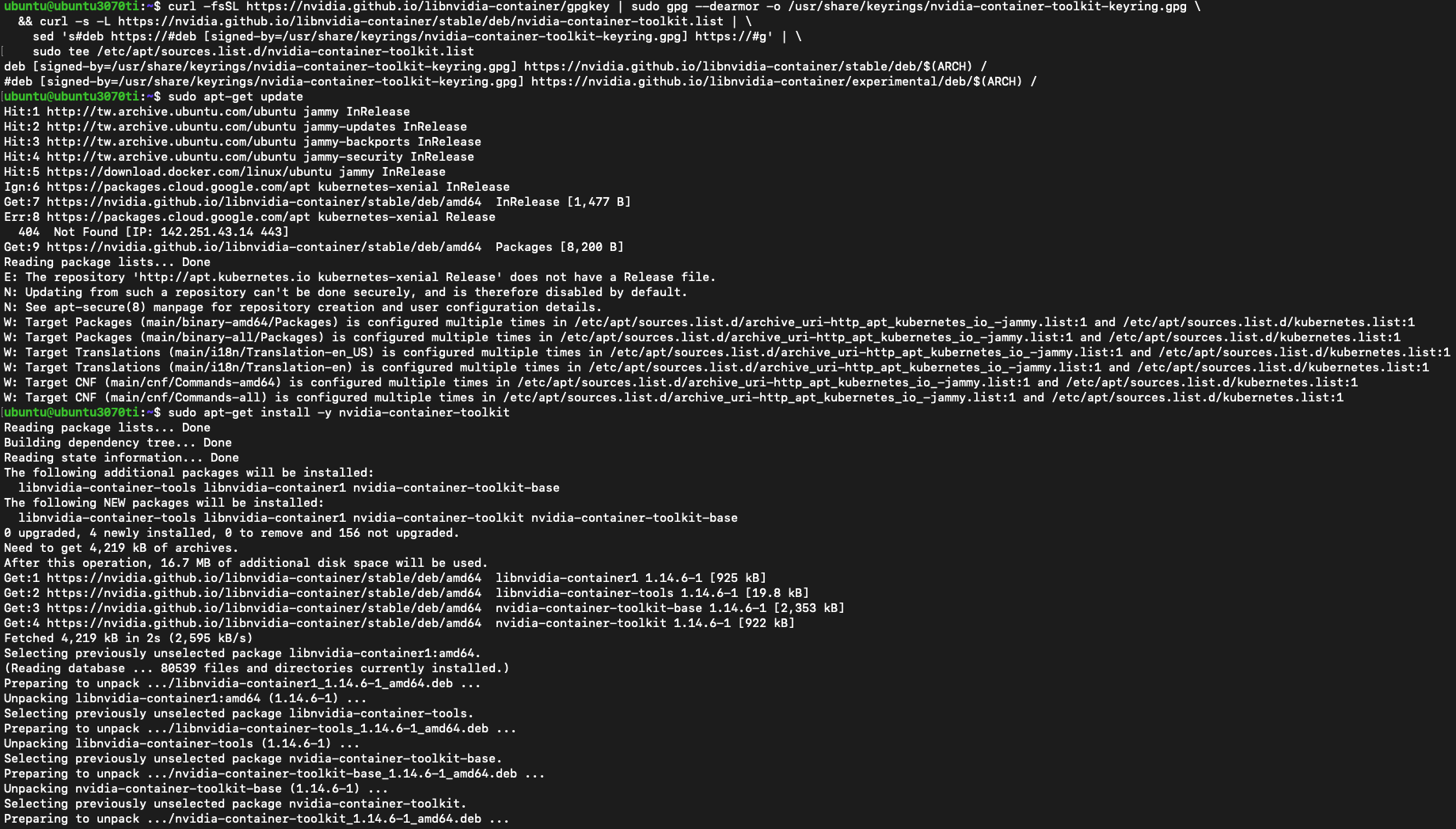

Installing with Apt

Configure the production repository

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

Update the packages list from the repository

sudo apt-get update

Install the NVIDIA Container Toolkit packages

sudo apt-get install -y nvidia-container-toolkit

Configuration

Configuring Docker

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

Confirm that /etc/docker/daemon.json configures the NVIDIA GPU runtime. It should look similar to the following

cat /etc/docker/daemon.json

{
  "exec-opts": [
    "native.cgroupdriver=systemd"
  ],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "default-runtime": "nvidia",
  "runtimes": {
    "nvidia": {
      "args": [],
      "path": "nvidia-container-runtime"
    }
  },
  "storage-driver": "overlay2"
}

If the official steps did not set default-runtime to nvidia automatically, add it manually.

sudo nano /etc/docker/daemon.json

sudo systemctl daemon-reload

sudo systemctl restart docker
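
A quick scripted check for the default-runtime setting might look like the sketch below. It is a plain text match rather than a JSON parser, so it only assumes the key and value sit on one line; has_nvidia_default_runtime is a hypothetical helper name.

```shell
# Sketch: succeed if the file sets "default-runtime" to "nvidia".
# This is a text match, not a JSON parse, so it assumes key and value
# are on the same line. has_nvidia_default_runtime is a hypothetical helper.
has_nvidia_default_runtime() {
  file="${1:-/etc/docker/daemon.json}"
  grep -Eq '"default-runtime"[[:space:]]*:[[:space:]]*"nvidia"' "$file"
}

# Usage:
#   has_nvidia_default_runtime || echo "default-runtime is not nvidia; edit daemon.json"
```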

Configuring containerd (for Kubernetes)

sudo nvidia-ctk runtime configure --runtime=containerd

sudo systemctl restart containerd

Install Kubernetes NVIDIA Device Plugin

Kubernetes NVIDIA Device Plugin Official GitHub Repo

Deploy nvidia-device-plugin DaemonSet to Kubernetes Cluster

kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.14.5/nvidia-device-plugin.yml
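
Before scheduling a test Pod it helps to wait until the plugin is running on every node. A sketch, assuming the v0.14.5 manifest creates a DaemonSet named nvidia-device-plugin-daemonset in the kube-system namespace (check with kubectl get daemonset -A if yours differs); wait_for_device_plugin is a hypothetical helper name.

```shell
# Sketch: block until the device plugin DaemonSet has rolled out.
# Assumption: DaemonSet name and namespace match the v0.14.5 manifest.
# wait_for_device_plugin is a hypothetical helper.
wait_for_device_plugin() {
  kubectl -n kube-system rollout status \
    daemonset/nvidia-device-plugin-daemonset --timeout="${1:-120s}"
}

# Usage:
#   wait_for_device_plugin 300s
```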

Check whether a Pod can run GPU jobs

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda10.2
      resources:
        limits:
          nvidia.com/gpu: 1 # requesting 1 GPU
  tolerations:
    - key: nvidia.com/gpu
      operator: Exists
      effect: NoSchedule
EOF

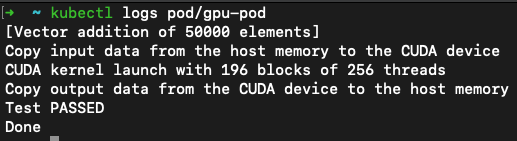

kubectl logs pod/gpu-pod

If the log shows Test PASSED, the Pod is using GPU resources successfully.

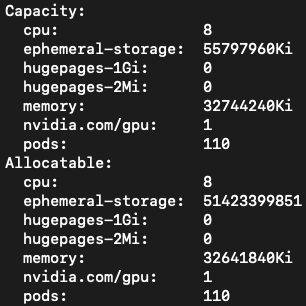
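
The log check can be automated for use in a smoke test. A sketch, assuming the sample image prints the literal text "Test PASSED" on success as described above; log_passed is a hypothetical helper, and the kubectl wait form in the usage comment assumes kubectl 1.23 or newer.

```shell
# Sketch: succeed if the log text on stdin contains "Test PASSED".
# log_passed is a hypothetical helper.
log_passed() {
  grep -q 'Test PASSED'
}

# Usage (the wait line assumes kubectl 1.23+ for --for=jsonpath):
#   kubectl wait --for=jsonpath='{.status.phase}'=Succeeded pod/gpu-pod --timeout=120s
#   kubectl logs pod/gpu-pod | log_passed && echo "GPU test passed"
```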

Check whether the node can use GPU resources

Check whether nvidia.com/gpu appears under both Capacity and Allocatable

kubectl describe node <Worker Node name>

# Example

kubectl describe node ubuntu3070ti
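
To read just the allocatable GPU count instead of scanning the full describe output, a JSONPath query can be used. A sketch; gpu_allocatable is a hypothetical helper name, and the dot inside nvidia.com has to be escaped so JSONPath treats it as part of the key.

```shell
# Sketch: print the number of allocatable GPUs reported for a node.
# Prints nothing if the node does not advertise nvidia.com/gpu.
# gpu_allocatable is a hypothetical helper.
gpu_allocatable() {
  # The backslash keeps "nvidia.com/gpu" as one key in the JSONPath.
  kubectl get node "$1" -o jsonpath='{.status.allocatable.nvidia\.com/gpu}'
}

# Usage:
#   gpu_allocatable ubuntu3070ti
```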

Last modified: 01 October 2024